You know those recipe blogs where you apparently have to read the author’s memoirs before you can actually find the recipe and just get on with making dinner? Yeah, this isn’t one of those. The short version is that I went full nerd and made a weighted scorecard for all the major music streaming services. You can get a copy here.

To use it, adjust the numbers in the “Weight %” column to account for what’s more or less important to you. If the “Weight %” cell turns green and the number to the left is 0.00 you’re good to go, otherwise adjust individual weight scores until you meet that condition. Then scroll down to the “Weighted Ranking” chart to see the music streaming service that’s right for you!

Go ahead, play around with it! If you break anything you can always just grab a fresh copy.

Ok, eventually you’re bound to have some questions like, “What’s included in all these different criteria?” or, “What do some of the scores mean?” Or maybe even, “Wait, what streaming service do you use?” Let’s break things down a little.

How to compare all the different music streaming services

Who remembers “The Promotion” episode of The Office in which Michael and Jim determine performance-based raises by placing beans on employees’ pictures? Honestly, that’s not far off from what I’ve done here, but I don’t want you to end up like Kevin, yelling, “WHAT DOES A BEAN MEAN?!” so let’s go through each criterion, what factored into each of them, and how scoring was applied. After that, we’ll look at some of the different features in the spreadsheet, discuss a few practical ways to approach weighting to find your ideal streaming service, and finally look at my personal pick.

Scoring all the criteria

This all started when I decided to reevaluate which music streaming service I was using. I had been a Tidal subscriber for about seven or eight years – having also flirted with Spotify in there for a little while – and thought that with more and more services offering lossless and hi-res tiers, maybe it was time to give some of them a shot and see what they had to offer. However, being the nerd and science guy I am, I didn’t just want to decide based on a gut feeling. I wanted it to be as objective and fair as I could make it. After a little thought, I landed on the following idea: what if I came up with a list of features or criteria that were generally desirable and/or available across all music streaming services and scored them? That would allow me to come up with overall scores for each service in order to rank them.

Of course, the score range would have to be equal for each criterion. If one feature could be worth up to five points, but another only worth up to two, that would skew the scores by making some features weigh more heavily than others. “Then again,” I thought, “maybe some features are more important to me than others. Moreover, maybe different features are more important to other people than they are to me.” That solidified it. If I scored all the criteria on an equal range and then added a weighting factor, different people could adjust the weighting to their preference based on which features are important to them to get personalized ranked scores of all the music streaming services.

After some trial and error, I settled on a score range of 1–3 points for each feature. For features that were basically yes or no binary options, that kept the difference in a total score from getting too large based on one category, while for other categories it allowed some granularity without getting overwhelming. Then, I reached out to my social media networks to make sure I was capturing and including all the kinds of things that were important to them as casual listeners, and they had some great suggestions. In the end, I compiled a list of 25 criteria. Here they are, listed alphabetically, with scoring assignments and what sorts of things were factored into the less straightforward categories.

Free library transfer

Services like Soundiiz and TuneMyMusic can transfer your playlists and favorite artists, albums, and songs from one streaming service to another. Some music streaming services give you a free voucher for a full transfer; others limit it to 500 songs. One point for no free transfer, two for a limited transfer, and three for a full transfer.

Label Support

Like credits and album sorting, browsing by music label can be a great way to find music. One point was awarded if the label is only displayed; two if you can search for labels; three if labels are shown as clickable links.

Local Files

Remember when we all used to download MP3s (that we obviously paid for)? Some nerds even went through the trouble of getting FLAC or ALAC files (totally not anyone in my household though). Some of us still have those music libraries on hard drives and like to be able to play them alongside streamed music. One point for no local file support; two if local files can be loaded but only played back on the device they’re stored on; three points if they get uploaded to the cloud and you can then listen to them on any device as if they were streaming.

Lyrics

Do you like to sing along to your favorite songs without having to wonder whether John Fogerty is saying “There’s a bathroom on the right” or “There’s a bad moon on the rise”? Lyrics support is great for that! One point for no lyrics support; two if it was in some way compromised; three for good lyrics displays that stay in time with the music.

Native Remote Control

Sometimes you want to play music on your laptop or Apple TV, but control it with your phone. Some streaming services allow you to do this right in the main app. Others make you use a separate remote control app. One point for no remote control app; two for a separate app; three if remote control is integrated right into the main app.

Normalization

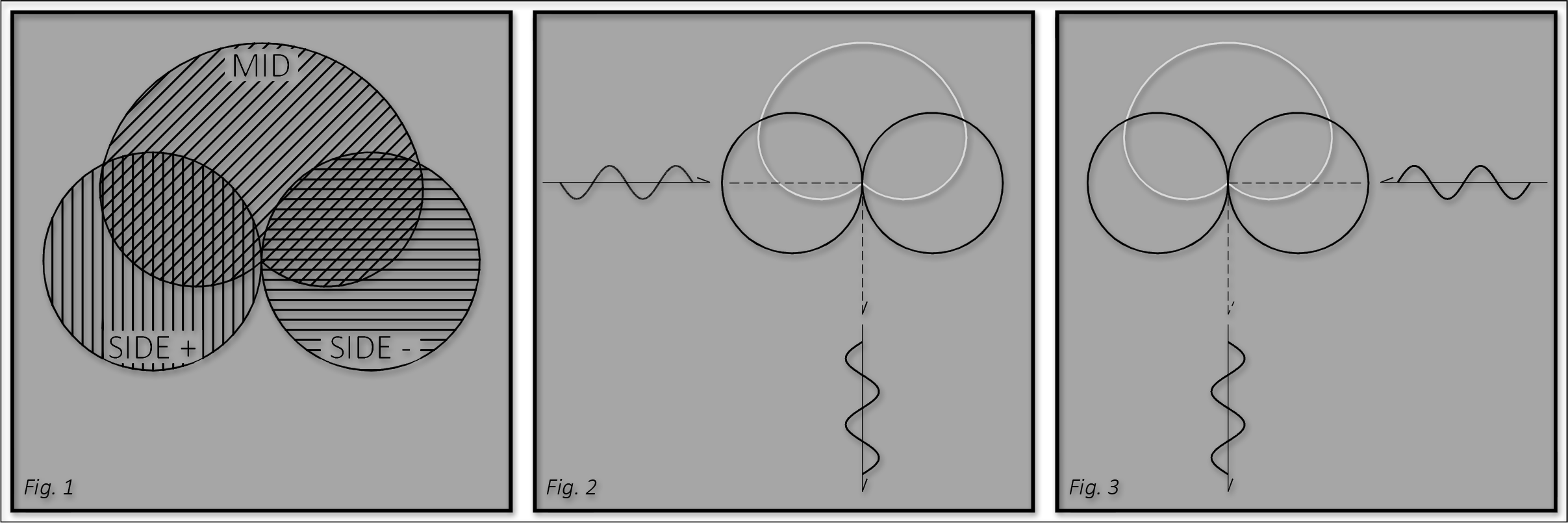

Audio normalization automatically adjusts the volume of songs so that you don’t get big jumps in loudness from one to the next, particularly in playlists. The details on this are nerdy, but essentially there are a few different ways to do it, one of which is more objectively correct (based on listener preference studies). One point for no normalization; two for track-based normalization; three for album-based (2.5 for a context-dependent mix of track and album).

Offline Mode

This one was sort of a freebie. I feel like an offline mode, where you can download music to play in airplane mode or when you don’t have a great signal, is an important feature, but all the services support it, so it becomes a little meaningless as a differentiator. One point for no; three for yes. Since everyone got a three, you could either weight it low or swap it out for another feature that I neglected to include.

Playlist Folders

This may also seem a bit niche, but I have lots of playlists. Playlists of artists, playlists of genres, playlists of labels, party music, board game music, and reference tracks. I like to be able to categorize those playlists and put them in folders so I can find them more easily. One point for no playlist folders; two if they’re supported but have to be created in the desktop app; three if they can be created in the mobile app as well.

Private Mode

Discovery algorithms are a cool way to find new artists, albums, etc. but sometimes you don’t want the algorithm to track that you have “Baby Shark” on repeat for your kids in the car, or the white noise or murder podcasts you play to fall asleep to. In its best incarnation, a private mode hides your listening activity from not only any social features built in, but also your play history and recommendations. One point if there’s no private mode; two points if it exists but doesn’t hide your activity from your recommendations; three points for the ideal behavior.

Radio

Radio-type features that can either create a “station” of similar music based on a song, artist, or album, or will keep the music going once you get to the end of a playlist or album have become a staple of modern music listening. Often, how well this performs is related to the discovery algorithms, but some services can have good discovery features with limited radio implementations. One point for no radio features; two if they’re limited; three if they’re full-featured and flexible.

Royalties

We talk about this a lot in the music community, but I’m not sure how much it’s discussed outside of that: artists get paid shockingly little for streams of their music. Like cost per month, this is a calculated score based on the reported pay per stream, with the highest-paying service getting three points, the lowest-paying getting one, and the others distributed equally in between. Also like cost per month, if you live outside the US, the royalty rates are likely different so it might be worth looking them up in your country and plugging in the results in columns Q–X.
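For anyone who wants to see how a calculated score like this might work, here is a minimal Python sketch. It takes “distributed equally in between” literally, as equal steps by rank, which is only one possible reading and may not match the spreadsheet’s actual formula. The function name `rank_scores`, the service names, and the per-stream rates are all made up for illustration.

```python
def rank_scores(pay_per_stream):
    """Give the lowest payer 1 point, the highest 3, and space the rest evenly by rank.

    This is an assumed reading of the scoring rule, not the sheet's real formula.
    """
    ordered = sorted(set(pay_per_stream.values()))
    if len(ordered) == 1:
        # Every service pays the same, so nobody is penalized.
        return {name: 3.0 for name in pay_per_stream}
    step = 2 / (len(ordered) - 1)
    return {
        name: 1 + step * ordered.index(rate)
        for name, rate in pay_per_stream.items()
    }


# Hypothetical pay-per-stream figures in dollars, not real reported rates.
rates = {
    "Service A": 0.003,
    "Service B": 0.004,
    "Service C": 0.008,
    "Service D": 0.013,
}
royalty_scores = rank_scores(rates)
```

With four services, the steps work out to 1, about 1.67, about 2.33, and 3, regardless of how far apart the actual rates are.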

3rd Party Connect

For hi-fi enthusiasts, it’s often preferable to play back music on a third-party device like those from Bluesound, NAD, or Denon. Sometimes that’s done through either AirPlay or Chromecast, but a better solution is to run the streaming app on the device and use a “connect” protocol. I tested this with my Bluesound system, so just because a service didn’t work for me doesn’t mean it won’t work for you, but in my opinion it still deserves a lower mark. One point for no 3rd party connectivity; two for a generic protocol like AirPlay or Chromecast; three for bespoke (and functional) protocols.

Other factors

Lastly, it’s worth pointing out that there are plenty of other criteria that are less ubiquitous but may still be important to you. Things like nearby concert listings for your followed artists, a virtual DJ, the ability to collaborate with friends on different streaming services (a la Deezer’s Shaker feature), DJ software integration, sleep timers (for aforementioned white noise and murder podcasts), music videos, karaoke features, podcasts, etc. If one of those is important to you, feel free to replace something like offline mode with your own scores for that category – just don’t mess with cost per month or royalties.

Album Sorting

This one may be a bit niche, but for someone who’s collected many LPs and CDs over the years, and enjoys sorting and re-sorting them based on things like release year, producer, or personnel, it’s really nice when some of this is replicated in a streaming app. A score of one means there’s just one default sort, typically alphabetical by title. Two points were awarded if sorting could be by one criterion (e.g. artist, title, or year, etc.), and three points were available if sorting could be by artist and then year, or artist and then alphabetical, etc. A score of 2.5 indicates either that mobile vs. desktop behavior was different (Apple Music), or that at least when sorting by artist, the sub-sort was by release date (Amazon).

Atmos support

This one is fairly straightforward. One point if Dolby Atmos is not supported at all; two points if Atmos is supported but a proprietary format is used for binaural (e.g. headphone) playback; three points if Atmos is supported and binaural playback uses the native Dolby binaural render.

Atmos/Stereo Switching

Another fairly simple one. One point for non-Atmos platforms; two points if switching between stereo and Atmos involves playing two different versions of the release; three points if there’s a relatively seamless toggle to switch between stereo and Atmos during playback without changing releases.

Audio Quality

More easy stuff. One point for lossy (e.g. “MP3” quality, although rarely actually an MP3 codec) streams; two points for CD quality (16-bit, 44.1 kHz) lossless streams; three points for “hi-res” streams, which include 24-bit, 44.1 kHz and up.

Browsing Experience

I really tried to stay away from subjective ratings, but I suspect it was inevitable at some point, and this is one of them. Points were awarded on a poor/good/excellent basis and included factors such as:

How useful and relevant the homepage feels.

Genre granularity. For example, one big grouping for “Pop/Rock” isn’t terribly helpful, but getting too granular can also become cumbersome.

Are things like moods and activities included?

Once you navigate to an artist, how easy is it to find different elements of their discography?

Of course, you may feel differently, so if you think I’ve scored a service very differently than you would, you may want to adjust all the scores for this category. That probably means doing some trials for your top two or three contenders.

Collaborative Playlists

Here’s another objective one. Does the service support playlists that your friends (or clients) can add tracks to? One point for no; two for planned at a future date; and three for yes.

Cost per month

This is another score you may want to update, particularly if you live outside the US, or aren’t interested in annual pricing. However, the scores here are calculated based on the price, with the lowest-priced service getting three points, the highest-priced getting one point, and the others being scaled in between. If you want to update this, don’t change the values in columns D–K, but rather those in columns Q–X, inputting the price in your currency and region.
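If you’re curious what “scaled in between” could look like, here is a minimal Python sketch using straight linear interpolation between the cheapest and priciest service. That is an assumption about the spreadsheet’s formula, not a copy of it, and `price_to_score`, the service names, and the prices are hypothetical.

```python
def price_to_score(price, all_prices):
    """Map a monthly price to 1-3 points: cheapest gets 3, priciest gets 1.

    Linear interpolation is an assumption; the sheet may scale differently.
    """
    cheapest, priciest = min(all_prices), max(all_prices)
    if priciest == cheapest:
        # All services cost the same, so everyone gets full marks.
        return 3.0
    return 3.0 - 2.0 * (price - cheapest) / (priciest - cheapest)


# Hypothetical monthly prices in your own currency.
prices = {"Service A": 10.0, "Service B": 12.0, "Service C": 15.0, "Service D": 20.0}
cost_scores = {name: price_to_score(p, prices.values()) for name, p in prices.items()}
```

Here Service A lands on 3.0, Service B on 2.6, Service C on 2.0, and Service D on 1.0, so a price halfway between the extremes earns exactly two points.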

Credits

Maybe this is more of a thing for people who actually work on music, but I love to be able to see who was involved in a song or album, from musicians to writers to arrangers and producers, all the way through to engineering and artwork disciplines. This score captures how thoroughly and effectively a service displays credits. One point for not at all; two for partial or difficult-to-access listings; three for comprehensive and well-organized listings. The one caveat here is that credits have to be supplied when the music is sent for distribution. Labels and indie artists: please include credits when uploading your music!

Cross-Device History & Synchronization

This category captures how thoroughly and quickly your listening history is synchronized between devices like your mobile and desktop applications. One point for none; two for slow or partial (e.g. albums or playlists, but not individual songs) sync; three points for instantaneous sync at the song level. In conjunction with “Native Remote Control” this is a good indicator of how easy and seamless it is to switch between devices.

Crossfade Support

If you like a seamless, radio-like experience where one song blends into the next, this might be of interest to you. One point was awarded if there was no crossfade support, two if it could be turned on and off with a crossfade time specified, and three if there was a DJ-like automix feature.

Curation & Editorial

This is another one that doubtless has some subjectivity in it. In particular, without using a service extensively for a longer time period, it’s hard to truly get a sense of this, but I’ve done my best. One point for no human-curated playlists or editorial content; two for generic playlists or editorial that feels more like marketing; three for human-curated playlists and good editorial with artist interviews, reviews, etc.

Device Sample Rate Switching

For services that support hi-res files that can be at multiple sample rates (e.g. 44.1 – 192 kHz) it’s nice if they can automatically tell a connected DAC (Digital to Analog Converter) what sample rate to operate at. One point for no sample rate switching; two if it theoretically exists but was nonfunctional in my testing; three points for working sample rate switching.

Discovery

Another tough one that’s a bit subjective and not easy to gauge without at least a few months of testing. Discovery scores a number of factors, like how varied the recommendations based on your listening history are, how easy they are to access, and how relevant the suggestions are to your taste(s). Note, this is separate from but related to “radio” – more on that later. Anyhow, 1–3 points for poor, good, and excellent discovery.

How to use and interpret the spreadsheet

Now that you understand the criteria and scoring, the simplest approach is to update the weight values in column C based on what’s important to you. We’ll discuss a few approaches to this below, but here’s a little peek behind the curtain of how the weighting scheme works.

With 25 criteria, a total weight of 100% is distributed amongst them. That means, for a flat weighting curve, each criterion gets a weight of 4%. Each weight is then used as a multiplier for all the scores in its row. So, if you say, “Playlist folders don’t matter at all to me” and give that category a weight of 0%, a) I’ll be very sad, and b) all the playlist folder scores will get multiplied by 0, thus making them 0 and therefore not contributing to the overall score whatsoever. Conversely, if you say “Playlist folders are very important to me” and give the category a weight of 8%, a) I’ll hug you, and b) all the playlist folder scores will get multiplied by 8, making them count twice as much as they would for a flat weighting. However, there has to be a give and take here. The sum of all the weights needs to be 100%. Again, a little more on this in the next section. But first, a few other things I think warrant explanation.
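The arithmetic described above can be sketched in a few lines of Python. This is an illustration of the idea rather than the spreadsheet’s own formulas: `weighted_score` is a hypothetical helper, and the four criteria and their scores are invented to keep the example short.

```python
def weighted_score(weights, scores):
    """Multiply each criterion's score by its weight and add everything up.

    weights: criterion -> weight in percent (must total 100)
    scores:  criterion -> one service's score from 1 to 3
    """
    total_weight = sum(weights.values())
    if abs(total_weight - 100) > 1e-9:
        raise ValueError(f"weights must total 100, got {total_weight}")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical four-criterion example (the real sheet has 25 criteria at 4 each).
service = {"Audio Quality": 3, "Lyrics": 2, "Playlist Folders": 1, "Radio": 3}

flat = {"Audio Quality": 25, "Lyrics": 25, "Playlist Folders": 25, "Radio": 25}
flat_total = weighted_score(flat, service)  # 75 + 50 + 25 + 75

# Zero out Playlist Folders and give its 25 points to Audio Quality.
custom = {"Audio Quality": 50, "Lyrics": 25, "Playlist Folders": 0, "Radio": 25}
custom_total = weighted_score(custom, service)  # 150 + 50 + 0 + 75
```

Because the weights always total 100 and every score sits between 1 and 3, the result can never fall below 100 or rise above 300, which is where the “Score (100–300)” row gets its range.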

Below the list of criteria, you’ll find a dropdown. This allows you to “grade on a curve,” if you want, and there are three options. Each option shows the scores as a grade from 0–100%, and the different curves define which scores equate to 0% and 100%. “No Curve” is the simplest, where the minimum possible score is 0% and the maximum possible score is 100%. This shows the “true scores” as it were. “Baseline to Max Score” keeps 0% equal to the minimum possible score, but then gives the highest-scoring service a 100%, and scales all the other scores in between. “Distributed Scores” normalizes the actual score range, giving the lowest scorer 0% and the highest scorer 100%, effectively spreading out the scores. This is mostly useful if you have a few scores that are very close together and you want to emphasize the differences.
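Here is a minimal Python sketch of how the three curves could be computed from that description. The function `grade` and the three example totals are hypothetical, and the 100 and 300 bounds assume weights that sum to 100 with scores from 1 to 3; the spreadsheet’s actual formulas may differ in detail.

```python
def grade(score, all_scores, curve="No Curve", min_possible=100, max_possible=300):
    """Convert a weighted total into a 0-100% grade on the chosen curve."""
    if curve == "No Curve":
        low, high = min_possible, max_possible
    elif curve == "Baseline to Max Score":
        low, high = min_possible, max(all_scores)
    elif curve == "Distributed Scores":
        low, high = min(all_scores), max(all_scores)
    else:
        raise ValueError(f"unknown curve: {curve}")
    if high == low:
        return 0.0  # no spread to grade against
    return 100 * (score - low) / (high - low)


# Hypothetical weighted totals for three services.
totals = [234, 216, 142]

no_curve = [grade(t, totals, "No Curve") for t in totals]
baseline = [grade(t, totals, "Baseline to Max Score") for t in totals]
spread = [grade(t, totals, "Distributed Scores") for t in totals]
```

The top scorer moves from 67% with no curve to 100% on either of the other two, while the lowest scorer only drops to 0% on “Distributed Scores.”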

You’ve also likely noticed there’s a bunch of color coding going on. The weights in column C, the normalized percentage grades in row 28, and all the individual scores use red to green color coding to show the lowest through highest values, respectively. This makes it easier to spot the low and high scorers for a particular category when looking across a row or to spot a service’s strengths and weaknesses when looking down a column. The “Score (100–300)” row is also color-coded to show alignment with your preferences once you input your weights (more on this later). Green indicates stronger alignment, while red indicates weaker alignment.

Then, of course, you have your two main charts. Weighted Ranking shows the weighted scores, ranked highest to lowest, on the curve of your choice, while Weighting shows the comparative weights of all the categories from low to high. I find the latter useful for visualizing how you’ve assigned importance to the different categories and for judging whether you need to adjust any of them.

Lastly, the “Reset” button resets all the weights to their default value of four, which may be useful if you feel you’ve strayed too far with the weighting and want to start fresh. The first time you click it, it may ask you to grant access to a script. That’s to be expected.

A few approaches to weighting

There are many ways you could approach weighting, but two methods come to mind as fairly straightforward.

First, you could go down the list and reduce the weighting for categories that matter less to you, even zeroing out any that don't matter to you whatsoever. This will give you a pool of points to use on other categories. To make this easier, your pool is shown in cell B2, to the left of the “Weight %” header. When you have excess points to assign, “Weight %” will have a light orange background, and the points will be shown to the left. When you’ve exceeded the total available points, “Weight %” will be shown with a red background and your negative point deficit will be shown to the left. The goal is to get the pool to 0 and have “Weight %” turn green. Shown right is what a first “reductive” pass might look like for me.

Next, you can assign the points from your pool to categories you care more about. If one or two categories are particularly important to you, you might double or triple their weights to 8 or 12 points. You can see I have a pool of 21.5 points to spread around.

Another approach would be to decide that there are really just two or three categories that are most important to you and to distribute the weight between them, zeroing out everything else. You could go for 50/50 weighting, 40/60, 30/30/40, or any other combo that tickles your fancy and adds up to 100.

Of course, other approaches are possible and valid. The only real constraint is that when all is done you’ve used 100 points, no more and no less, and that the top two cells indicate that accordingly.
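The pool bookkeeping boils down to a subtraction and a color check. The Python below is a rough sketch of that logic; `pool_status` is a hypothetical name, and rounding to two decimals is an assumption based on the “0.00” display rather than a known detail of the sheet.

```python
def pool_status(weights, total=100.0):
    """Return the unassigned points and the color the header would show."""
    pool = round(total - sum(weights), 2)
    if pool == 0:
        return pool, "green"   # exactly 100 points used
    if pool > 0:
        return pool, "orange"  # points still left to hand out
    return pool, "red"         # more than 100 points assigned


flat = [4.0] * 25                             # the default weighting
reductive = [4.0] * 19 + [2.5] + [0.0] * 5    # a first pass that frees up points
overspent = [4.0] * 24 + [8.0]                # one category doubled, nothing reduced

flat_result = pool_status(flat)
reductive_result = pool_status(reductive)
overspent_result = pool_status(overspent)
```

The reductive pass leaves 21.5 points to reassign, while doubling one category without trimming anything else puts the pool 4 points into the red.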

If you think I’ve missed a particularly important approach, please feel free to let me know in the comments below.

My personal pick and how I got there

So, what service did I land on? Apple Music, and to be honest, that surprised me a little bit. I went in feeling fairly confident I’d stick with Tidal – and that’s exactly why I structured this little experiment in the way that I did. So why Apple?

First of all, Apple Music has quite a bit going for it. Much more than I realized, in fact. Of all the services, it has the highest raw score, beating Spotify by 7%. That said, without the benefit of a curve, its score is only 67%. Still, that gives it a strong head start that may be hard to overcome without some very specific weighting preferences. No matter, I feel I do have some specific weighting preferences, so let’s chat about a few of them and look at why Apple still came out on top (for me).

Here are my personal weighting choices.

Let’s start by looking at the categories I weighted above the average of 4.0. At a slight bump up of 4.5 we have 3rd Party Connect. I have a Bluesound system for my living room, and outdoor speakers near my fire pit. It would be nice if whatever I chose worked with that, but I can use AirPlay in both locations, and I almost never group them.

Then, at 5.0 I have Album Sorting, Atmos/Stereo Switching, Credits, Device Sample Rate Switching, and Label Support. These are all fairly important to me because I’m a) a nerd, and b) a professional audio engineer.

Normalization and Playlist Folders each get a weight of 6.0. In the studio, I almost certainly want normalization off, but in the car, on walks, making dinner, etc. I prefer normalization to be on, and we’ve already discussed why playlist folders are important to me. At 6.5 I have Atmos and Local Files. I’m not working in Atmos yet, but I like to listen to the binaural Atmos versions of mixes and compare them to the stereo versions. I also have a huge library of local files, a great many of which simply aren’t available on streaming services, so having access to them, especially while on the go, is great.

Audio Quality gets a 7.0. That should come as no surprise. Remember: nerd, professional audio engineer. At the very top are Royalties. Most of my friends and something like 99% of my clients are musicians. Of course I want them to be paid as fairly as possible for their work when people listen to it.

When it’s all said and done and I look at the scores without a curve, my weightings have bumped Apple down 3% from 67 to 64%, and Tidal up 4% from 58 to 62%. This tells me a few things. First, you can tell something about a streaming service’s “alignment” with your values by whether its weighted score increases or decreases compared to its unweighted score. Therefore, Apple is a little less in alignment with my values, while Tidal is a little more in line. This may be worth bearing in mind if your top two services are fairly close in weighted scores, which in this case – at only 2% apart – they are.
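That comparison is easy to reproduce for any set of services. The short Python sketch below uses the four percentages quoted above; the helper name `alignment_shift` is made up for illustration.

```python
def alignment_shift(unweighted, weighted):
    """Weighted minus unweighted grade, in percentage points, per service."""
    return {name: weighted[name] - unweighted[name] for name in weighted}


# No-curve grades quoted in the text, in percent.
unweighted = {"Apple Music": 67, "Tidal": 58}
weighted = {"Apple Music": 64, "Tidal": 62}

shift = alignment_shift(unweighted, weighted)
gap = weighted["Apple Music"] - weighted["Tidal"]
```

A positive shift means your weights favor what a service is good at, and a negative one means the opposite. Here the shifts are -3 for Apple Music and +4 for Tidal, with a 2-point gap between the weighted scores.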

Here’s what my weighted scores look like.

We can also look at the scores on the two available curves to tease out a little more of the difference if we want. In particular, “Distributed Scores” can give an interesting perspective of just how close the top two are when the bottom scorer is set to 0%.

Still, at the end of the day, Apple Music and Tidal are very close for me. Apple wins by the numbers, but Tidal is a little more in line with my own priorities. So, let’s call an audible and look at them side-by-side. Interestingly enough, it kind of comes down to my two 6.5 weighted categories: Atmos and Local Files. I would prefer to listen to the Dolby binaural version of Atmos mixes over Apple’s proprietary spatial-to-binaural approach, but it’s more of a curiosity, plus Apple makes switching between stereo and binaural nearly seamless, which is a nice touch. Streamable local files are a revelation though! I’ve been rediscovering so much of my collection that I haven’t listened to in years, and I’m positively loving it. Honestly, it would almost be worth the price alone, just to be able to stream my whole collection anywhere in the world (and yes, I know there are other ways of doing that, several less costly). Throw in all the other stuff Apple Music is good at though and it does feel like the right choice for me. At least for now.

Strengths and weaknesses of each music streaming service

Ok, to wrap things up, let’s take a quick look at the strengths and weaknesses of each streaming service. This will basically be a narrative to accompany the numbers, based on my experience demoing all these services.

Amazon Music Unlimited

If, above all else, you’re after hi-res, and also want the native Dolby binaural Atmos render experience with near-seamless switching between binaural and stereo, Amazon might be the service for you. However, its royalty payouts are right down at the bottom with Spotify, and it’s got plenty of other shortcomings, from a lack of collaborative playlists and label support to an absence of playlist folders and a private listening mode. As a mastering engineer, I’m also irked by track-based normalization, as it messes with inter-song loudness balances on albums, but others may be less bothered.

Apple Music

As we’ve already discussed, Apple Music has a lot going for it, but I would say its three strongest suits are excellent local file support (it will even replace MP3/AAC versions with the lossless/hi-res ALAC versions from its streaming and store catalog, if available), label support that allows you to click through and browse entire discographies, and lossless, hi-res audio quality. It does have a few shortcomings though, several of which are downright odd. First, Apple does the handoff thing between macOS and iOS devices so well with many applications that it makes its absence here even more conspicuous. Coupled with a song-level listening history that’s a bit buried (album or playlist history is more easily accessible), it makes picking up on one device where you left off on another less smooth than you would expect for Apple. In a similar vein, I can switch my device sample rate in the Audio MIDI Setup application, so why can’t Music do it natively? A bit frustrating, maybe, but probably not enough to turn most away who value the other things Apple does well.

Deezer

If you’re a current Spotify user and looking to make the jump to lossless audio, but are fine with CD quality and don’t necessarily care about hi-res, Deezer feels like the most direct competitor in a lot of ways. I liked its browsing experience even more than Spotify’s. It also gives you a voucher for a free, full collection transfer from other services, has better local file support in that a limited number can be streamed, has a fairly decent native remote control app (via Deezer Connect), pays slightly better royalties, and is cheaper to boot. The big missing features are playlist folders and a true private mode. The discovery algorithm took a little while to get up to speed, but once Deezer had copied over my collection from Tidal and sat on it for a week or two, the Discovery and New Releases playlists started to get quite good. The Flow feature is also quite good. Easily the top suggestion for the non-hi-res, non-Atmos crowd (all else being equal), despite the same normalization gripe as Amazon.

Napster

Ohh, Napster… I wish I had more good things to say about you. You are cheap-ish, offer CD quality, and have fairly decent cross-device history and synchronization, but there’s just not a lot else going on. It sort of feels like you didn’t try, and your unweighted, un-curved score of only 21% reflects that. What’s more, I don’t think there’s any weighting combination where Napster comes out on top. Not recommended.

Qobuz

Qobuz is an interesting one, and I might characterize it as something like the purist’s choice. While its raw score is a slightly underwhelming 45%, if your priorities are audio quality, collaborative playlists, credits, curation & editorial, device sample rate switching, a free library transfer, label support, and royalty payouts, it not only scores perfectly but takes an impressive 47% lead over the next closest service. Practically speaking, if you’re the type of person who prefers to listen to albums over playlists, enjoys good editorial and interviews, doesn’t care about Atmos, local files, or normalization, and generally knows what they want to listen to, Qobuz may be a great fit. Just be prepared for a sub-par experience when it comes to Atmos, browsing, discovery, local file support, lyrics, a native remote control, normalization, playlist folders, and any sort of private listening mode.

Spotify

It’s hard to deny it. Spotify still feels like the most mature and full-featured application. If you can overlook Atmos, audio quality, a free library transfer, label support (which frustratingly used to exist and has been removed), and anything remotely resembling fair royalty payouts, you may want to stick with Spotify (assuming you already use it). However, and I’ll admit some bias here, if by some chance you’re looking to pick your first streaming service, or make a switch from something other than Spotify, I would have to suggest Apple Music or Deezer. The improvement in audio quality you get with any other service (excepting YouTube Music) is noticeable, even by most who claim to be casual – rather than professional – listeners. More to the point, Spotify’s artist payout model is abysmal, and recently they have made a change wherein a song must be streamed at least 1,000 times in a 12-month period to receive royalty payouts. If it doesn’t reach that minimum, the royalties that would have gone to that artist are redirected to bigger artists.

Tidal

Tidal is a great Amazon alternative if you don’t mind trading off near-seamless Atmos/stereo switching for some other really nice features. Areas in which Tidal shines in comparison to Amazon include an editorialized “magazine,” playlist folders, album-based normalization (thumbs up), a marginally better discovery experience, and a 3.25 times higher royalty payout rate. Stereo-to-Atmos switching currently requires jumping between releases, which makes direct A/B comparison cumbersome, but is fine otherwise – and you still get the native Atmos binaural render. There are a few things you may find yourself missing on Tidal, though: it has no collaborative playlists, no crossfade, label, or local file support, no native remote control or private listening mode, and the highest price point in the market at $20/month (although if you want to forgo hi-res audio, a CD-quality tier is available for the same cost as Spotify).

YouTube Music

YouTube Music caught me a little off guard and might be the best candidate for an “add-on” music streaming service – in other words, a secondary service to fill in the gaps of a primary service. The things it does notably well include a very good browsing experience, collaborative playlists, great cross-device history and synchronization, a unique and compelling discovery mode, local file support just shy of Apple’s, well-integrated lyrics, a great private mode, and engaging radio streams. The interesting thing about YouTube Music is that it seems to either do things really well, or really poorly. It kind of falls on its face when it comes to album sorting, Atmos, audio quality, credits, crossfade support, curation or editorial, a free library transfer, label support, a native remote, and playlist folders.

By taking the best of YouTube Music and supplementing its shortcomings with another service, you can get the best of quite a few categories. A few interesting combinations that could be worth considering:

YouTube Music + Qobuz: This has an unweighted score of 68%, just beating Apple’s flat 67%.

YouTube Music + Apple Music: If you’re after a more “conventional” streaming service than Qobuz for your YouTube Music alternate, Apple Music also makes for – unsurprisingly – a powerful combo, with an unweighted score of 69%.

YouTube Music + Tidal: If you’ve got some spare change to spend, this is perhaps the most potent combination, as the two fill in each other’s gaps very nicely. Even considering the extra expense, the unweighted score is an impressive 72% – or is that impressive?
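If you want to try other pairings yourself, one way to approximate a combined score is to take the better of the two services’ scores for each criterion. To be clear, that method is an assumption (the exact calculation behind the combo figures isn’t spelled out here), and it sidesteps cost and royalties, which don’t combine so neatly. The Python sketch below uses invented services and scores; `combine` and `flat_grade` are hypothetical helpers.

```python
def combine(first, second):
    """Per criterion, keep whichever service scores higher (assumed method)."""
    return {name: max(first[name], second[name]) for name in first}


def flat_grade(scores):
    """No-curve percentage with every criterion weighted equally."""
    mean = sum(scores.values()) / len(scores)
    return 100 * (mean - 1) / 2  # a mean of 1 maps to 0%, a mean of 3 to 100%


# Hypothetical scores for two services with complementary strengths.
service_a = {"Lyrics": 3, "Credits": 1, "Audio Quality": 1, "Private Mode": 2}
service_b = {"Lyrics": 1, "Credits": 3, "Audio Quality": 3, "Private Mode": 1}

combo = combine(service_a, service_b)
grades = (flat_grade(service_a), flat_grade(service_b), flat_grade(combo))
```

In this toy case the individual services grade at 37.5% and 50%, while the pair reaches 87.5%, which shows how quickly complementary strengths add up.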

So, which streaming service is best?

As you can hopefully see at this point, the answer has to be, “It depends.” They all have their own strengths and weaknesses – except Napster, sorry – and what’s most important to you can, and I would argue should, help determine which music streaming service is best for you. My hope is that by approaching this question from a few different angles, I’ve helped you figure out which music streaming service is best for you.

Additionally, if you’re a musician who wants your music to sound the best it can on any and all streaming services, please don’t hesitate to get in touch via the contact page. I’d love to work with you to help realize your musical vision.

So, which streaming service did you land on? Do you have any questions? Are there any crucial factors you think I’ve left out? Sound off in the comments below and I’ll respond to as many of them as I’m able. Thanks, and happy streaming!